Sebastian Bruijns / sab_pybasicbayes

Commit 1d0a42ca, authored 6 years ago by Matthew Johnson

update readme image links

parent cdb3a911

README.md (3 additions, 3 deletions)

@@ -44,7 +44,7 @@ plt.plot(data[:,0],data[:,1],'kx')

```python
plt.title('data')
```

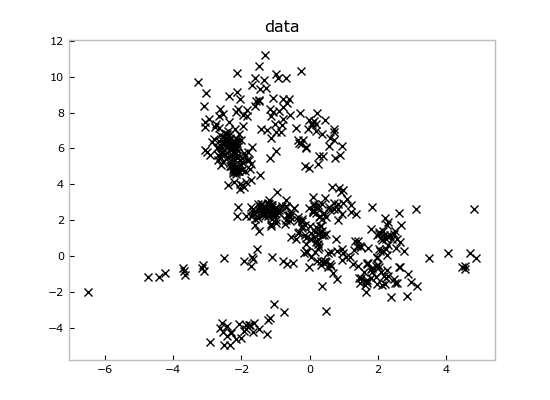

Imagine we loaded these data from some measurements file and we wanted to fit a
mixture model to it. We can create a new `Mixture` and run inference to get a

...

...

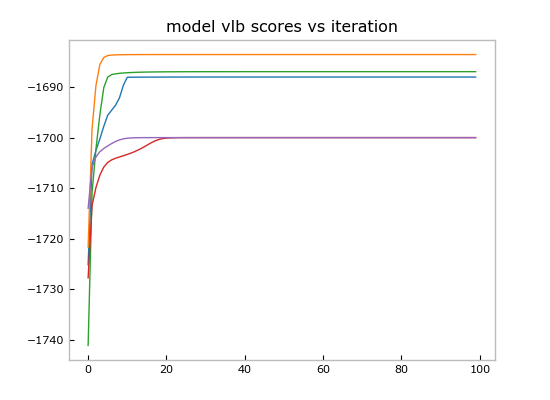

@@ -95,7 +95,7 @@ for scores in allscores:

```python
plt.title('model vlb scores vs iteration')
```

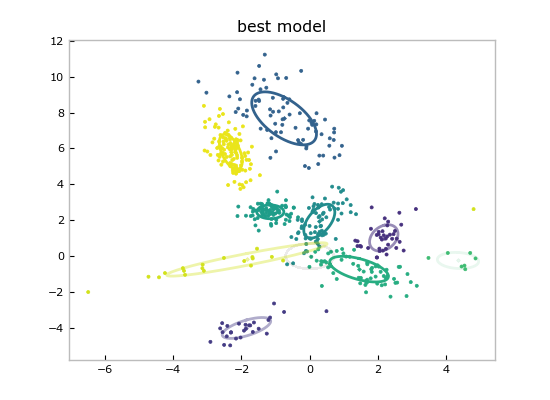

And show the point estimate of the best model by calling the convenient `Mixture.plot()`:

...

...

@@ -104,7 +104,7 @@ models_and_scores[0][0].plot()

```python
plt.title('best model')
```
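The hunk context `models_and_scores[0][0].plot()` suggests a list of (model, score) pairs ordered best first. The sketch below shows one way such a list might be built. It is an illustration only: the string "models", the score values, and the `rank_models` helper are hypothetical stand-ins, and the assumption that a higher final vlb score is better is ours, not something this diff confirms.

```python
import operator

# Hypothetical stand-ins: in practice these would be fitted mixture models
# and their per-iteration variational lower bound (vlb) traces.
allmodels = ["model_a", "model_b", "model_c"]
allscores = [
    [-120.0, -95.5, -90.1],
    [-130.0, -88.2, -85.7],
    [-110.0, -99.0, -97.3],
]


def rank_models(models, score_traces):
    """Pair each model with its final score and sort highest score first."""
    pairs = [(model, trace[-1]) for model, trace in zip(models, score_traces)]
    return sorted(pairs, key=operator.itemgetter(1), reverse=True)


models_and_scores = rank_models(allmodels, allscores)

# The first pair holds the best-scoring model, so [0][0] is the model itself.
best_model, best_score = models_and_scores[0]
print(best_model, best_score)
```

With real model objects in place of the strings, `models_and_scores[0][0]` would be the object on which `.plot()` is called.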

Since these are Bayesian methods, we have much more than just a point estimate
for plotting: we have fit entire distributions, so we can query any confidence

...

...
