From 1d0a42ca4c64bfe2ecaf5d7425ac7b0e8db66e49 Mon Sep 17 00:00:00 2001

From: Matthew Johnson <mattjj@csail.mit.edu>

Date: Sun, 11 Nov 2018 16:20:26 -0800

Subject: [PATCH] update readme image links

---

README.md | 6 +++---

1 file changed, 3 insertions(+), 3 deletions(-)

diff --git a/README.md b/README.md

index 1c05dde..baa0ebb 100644

--- a/README.md

+++ b/README.md

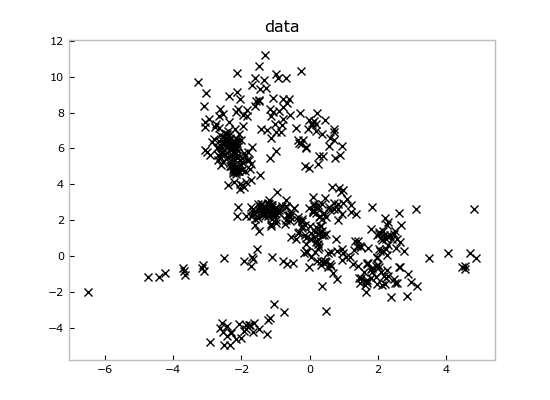

@@ -44,7 +44,7 @@ plt.plot(data[:,0],data[:,1],'kx')

plt.title('data')

```

-

+

Imagine we loaded these data from some measurements file and we wanted to fit a

mixture model to it. We can create a new `Mixture` and run inference to get a

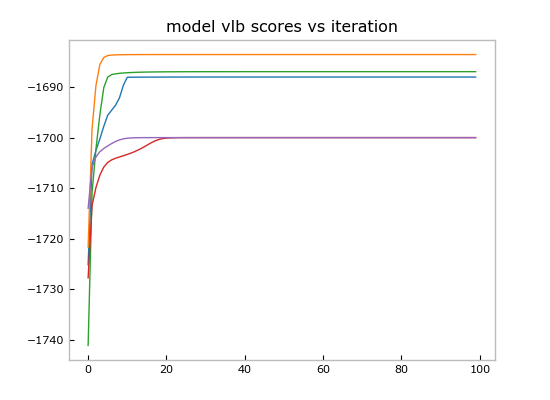

@@ -95,7 +95,7 @@ for scores in allscores:

plt.title('model vlb scores vs iteration')

```

-

+

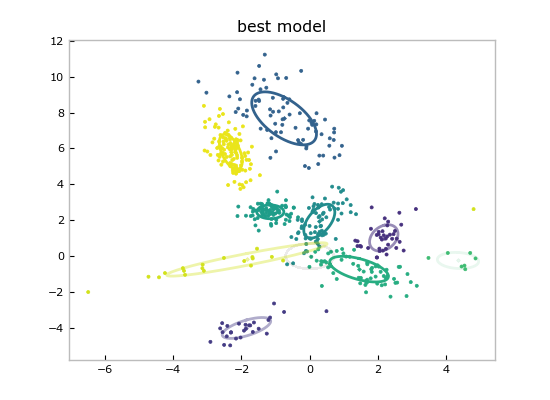

And show the point estimate of the best model by calling the convenient `Mixture.plot()`:

@@ -104,7 +104,7 @@ models_and_scores[0][0].plot()

plt.title('best model')

```

-

+

Since these are Bayesian methods, we have much more than just a point estimate

for plotting: we have fit entire distributions, so we can query any confidence

--

GitLab